accesses since January 23, 2008

accesses since January 23, 2008

copyright notice

copyright notice

link to published version: Communications of the ACM, April, 2008

link to published version: Communications of the ACM, April, 2008

accesses since January 23, 2008

accesses since January 23, 2008

Hal Berghel

IT security has received increased attention over the past few decades primarily, but not exclusively, due to the increased threat from viruses, worms, password crackers, Trojan horses, and a cornucopia of other types of malware and exploits. As a consequence of this increased attention, a variety of security models have been proposed. Security-in-depth (SID) is one such example. Winn Schwartau's time-based security is another. In this column I offer another modest example extrapolated from popular culture: Faith-Based Security, aka "no network left behind."

SECURITY MODELS

By their very nature, security models are usually out of date. Security modeling is akin to driving forward while looking through the rear-view mirror since security systems are primarily reactive. The problem is illustrated by zero-day exploits where the first appearance of an exploit coincides with the first appearance of a vulnerability. One of the grand challenges in future digital security is to figure out how to model the unknown in anticipation of post modern exploits such as zero-day attacks and so-called "super worms."

Security models also tend to be obtuse. Though “security-in-depth” is a household phrase in IT circles, few could define it precisely. The phrase has been used to describe everything from cascaded network defenses and layered intrusion prevention/detection systems to differentiated password control policies. About the only common theme I can detect is that security-in-depth seems to be used interchangeably with "more-is-better."

THE SECURITY-IN-DEPTH FALLACY

There is an interesting fallacy in informal logic called the principle of vacuous alternatives. It goes something like this: Take any sentence. If the negation of that sentence seems preposterous, then the original sentence is likely vacuous. As an example, consider "I believe in justice." The negation, "I don't believe in justice," seems like an absurd remark. It's not that it's nonsensical. Rather, it has no conversational contribution to make as it's hard to imagine how any reasonable person could disagree with it. Vacuous propositions behave like semantic tautologies.

Such is the case with security-in-depth. Have you ever heard an IT professional champion the cause of "superficial security", "shallow security," or "myopic security?" Not likely. This is the primary reason why security-in-depth is so poorly understood. It's vagueness quickly gives way to vacuousness on inspection.

SECURITY-THROUGH-OBSCURITY

I admit that a prima facie case could be made for security-in-depth even in the naïve sense of "more-is-better." When I propose adding a new vitamin to my diet, my internist tells me "at this point there is no physiological evidence that suggests that this substance is harmful to humans, so knock yourself out." As with my vitamins, a random application of security applications and systems is unlikely to do any more harm than lure one into a false sense of security, and perhaps slow things down a bit. And like the vitamins, when carefully and judiciously applied and evaluated in a controlled experimental setting, even naive security-in-depth can be of some value.

Such is not the case with our third model: security-through-obscurity. No prima facie case may be made here. The general premise of security-through-obscurity is that inviolability is a consequence of the enigmatic. Of course this is same sort of reasoning that helped the Imperial Japanese Navy and German Wehrmacht become the global powers they are today. The Japanese Purple and JN-25 codes, and the German Enigma cipher system, were assumed to be inviolate precisely because of their hidden complexity. As far back as the 1880's, August Kerckhoff proposed that no cryptographic system that purports to be secure should be predicated on the assumption that no one would ever figure out how it worked - rather the emphasis should be robustness of the procedure and key strength. Both Axis powers failed to comprehend the weakness of security-through-obscurity. This observation also speaks in favor of the robustness of the open source software.

Despite our intuitions, many software systems have adopted STO to their cost. To illustrate:

Windows buffer overflows such as the IDQ.DLL overflow in Code Red Worm were entirely predictable to anyone who knew how the Windows ISAPI extensions worked. This was a design defect that produced a buffer overflow and ran the malware with elevated privileges since IDQ.DLL runs within Inetinfo.exe as local administrator. It was assumed that no one would notice the inadequate bounds and error checking built into the operating system. We'll place this in STO category I: failure to write secure code. Conceptually similar vulnerabilities like format string attacks (printf) in Unix and SQL compromises in Windows (IIS/RDS) would also fall into our first category.

Another example is the entire suite of 802.11 security vulnerabilities. In this case, the defect was actually built into the standards. Nowhere is this more evident than with the wired equivalent privacy (WEP) protocol.

WEP has many "issues" that go beyond our current interests. However, one stands out as a paradigm case of a mistake carried through to perfection: the sloppy implementation of the RC4 symmetric, stream cipher. The faulty WEP algorithm was a part of the original IEEE 802.11 protocol specification.

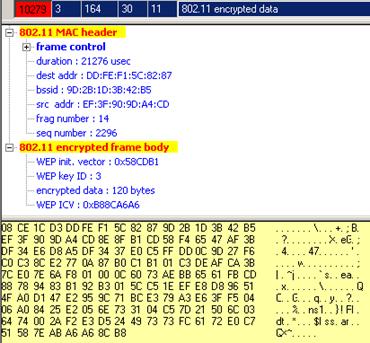

Generally, WEP works like this. The RC4 algorithm uses pseudo-random generation algorithm (PRGA) to produce a key-stream of bits that are XORed with the plaintext to create the ciphertext. Key-change is accomplished by adding an Initialization Vector (IV) that makes each packet key unique. The IV is concatenated with the WEP key to form the WEP seed.

The properties of the IV are interesting:

The community of FMS (after Fluher, Mantin, and Shamir) attack analysts reacted immediately. In short order a flurry of successful WEP-cracking tools were developed ( WEPAttack, WepCrack, Aircrack, WepLab, WEPWedgie, etc.) all made possible by the faulty implementation of RC4. A virtual cottage industry was made possible because the original WEP security standard followed the security-through-obscurity model. We will put the WEP vulnerability into our new STO Category II: botched implementations.

The WEP Initialization Vector is communicated in cleartext (e.g., 0x58CDB1).

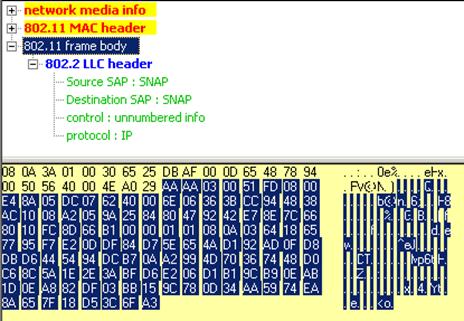

Figure 2: The 802.11 Frame Body always begins with a SNAP Header (e.g., AA) .

One might think that the frailty of WEP would have triggered a total re-think of WiFi security. Such is not the case. While WEP's successor, Wireless Protected Access (WPA) did strengthen the integrity checking algorithm and key management, it basically just added another layer of obscurity over the sloppily designed WEP in the form of a shell over the RC4 algorithm. Deployed by the Wi-Fi Alliance in 2002, WPA didn't really eliminate the key management problem inherent in WEP, but rather proliferated the number of keys involved. WPA uses a pairwise master key (PMK) to generate additional keys that are combined with sender MAC address, packet sequence number, the wireless' Service Set ID, and SSID length, etc. as grist for the hashing mill (PKCS #5 v. 2.0). Let's think about this. If an underlying procedure is faulty, does it become less faulty if we use it over and over and over again? WPA relied on security-through-obscurity just like its predecessor. Predictably, within a year of release, a successful WPA attack was discovered. Shortly therafter, the WPA cracking utility coWPAtty was released that reverse engineers the PMK from the SSID, SSID length, sequence number MAC address, and WiFi security was back at the starting block.

Neither was the Extensible Authentication Protocol immune. Cisco's version of EAP, LEAP, deserved the term Lightweight. LEAP's major fault was that it relied on the MS-CHAPv2 hashing algorithm for authentication. MS-CHAPv2 does not use "salt," so the same plaintext value will always produce the same hashed value. This makes EAP-LEAP vulnerable to dictionary and replay attacks. Once again, the defense of EAP-LEAP ultimately relied on no one finding out how the system works. August Kerckhoff could have predicted this without ever seeing a computer.

My final example came to my attention within the past few weeks. MIFARE is an proprietary encryption technique for RFID (Radio Frequency Identification) developed by Philips and Siemens in the late 1990's. MIFARE is an attempt to cryptographically secure the now-ubiquitous RFID space which relies on RF transmission for communication between transmitter and receiver.

Following the common theme, the security of the proprietary MIFARE system is predicated on the belief that no one will discover how it works. And, as one might predict, some MIFARE circuits were reverse-engineered down to the gate level. The result was the discovery that the random number generation that drove the encryption resulted from a 16-bit key linear feedback shift register based on a master key and a time signature. With RFID sniffing via an open PICC (proximity integrated contactless chip) card and a logic analyzer, it is possible to discern patterns in the challenge-response authentication procedure that can be used in a replay attack, and from there it is possible to recover the key from the value of the unique identifier and the observed behavior of the shift register in the authentication process. We'll create STO category III for this MIFARE vulnerability: turning chip designers loose with CAD/CAM software without adequate education and training.

FAITH-BASED SECURITY

Of course, examples of failed security-through-obscurity could fill a weighty tome. I've mentioned three. These examples highlight the consequences of building deficiencies into the design of things, or at least unwittingly including them. The flaws would likely have been detected and reported had the code, system, or chipset had been carefully analyzed during impartial peer review by qualified professionals.

But I don't want to leave this critical view of deficiencies at the feet of naïve defense-in-depth or security-through-obscurity. I'm looking for first principles here.

I'll refer to the common elements between them under the general rubric of faith-based security - in the most secular sense of this popular phrase of course. The only thing these two security models have going for them is the unsupportable and unjustified faith that they are reliable. These are manifestations of the technologist succumbing to the self-deception that secrecy and tight lips will cover all design misjudgments. This reminds me of an episode of the Australian television series, The Games, where the contractor of the Olympic stadium accidentally made the 100 meter track 94 meters long but didn't tell anyone because he thought no one would notice. He only admitted his mistake when it was pointed to him that world Olympic sprinting records would begin to fall to K-12 athletes.

I propose that faith-based security enter our vocabulary as the default model of IT security. Let's get the faith-based orientation of naïve security-in-depth and security-through-obscurity up front where it belongs. Think of the advantages. If an auditor asks what we were smoking when we decided to place our Internet web server on the inside of our enterprise firewall, we report that we have faith in our Internet comrades. Faith is a predicate of propositional attitude like belief, want, and desire. If someone says they have faith in something, one can't say "No you don’t," at least not until someone comes up with a 'cerebroscope' that can read thoughts. The auditor doesn't have faith, we do have faith; half-empty, half-full. You get the idea.

Since the integrity of an FBS security implementation is by definition taken on faith, we hold the position that whatever policies and procedures are discovered by an auditor were actually intended. So what if our corporate mailer is running on an operating system that hasn't been supported since perestroika -- we have faith in good old "digital iron." After all, when's the last time you read about some hacker compromising OS/2 or Multix? So the primary remote access to our file server is TFTP - our spin is that any protocol that old is "time-tested." So our password security policy requires LASTNAME followed by YEAR - we emphasize that we have a rule for password expiration built right into our password security policy.

No baselines to measure, no checklists to distract us, no concern over best practices, no specific objectives to define. COBIT? Out the window. FISCAM? Who needs it. SOX, HIPAA, GLB - fuggettaboudit!

So the next time someone challenges your organization's security model, rather than beating around the bush, making excuses, blaming budgetary woes, faulting management's lack of vision, chastising vendors, etc., think outside the box. State up front that it's your security model is faith-based and take a swerve around all of the minutiae. Treat these details like all of those log files that you haven't reviewed since you upgraded to NT Service Pack 2. Build in backward "time basing" to the ultimate IT apocalypse - the implosion of the commercial Internet. After that, who will care about digital security anyway?

URL PEARLS

When it comes to digital security systems, secrecy is indeed the mother of dysfunction.

The security vulnerabilities above were real and betray only the slightest hint of literary hyperbole. For those who are interested in the details, two of the security-through-obscurity examples were covered in earlier Digital Village columns: the Code Red Worm was discussed in December, 2001; and Wireless Infidelity appeared in December 2004 and again in August, 2005. The RFID MIFARE exploit was presented December 27, 2007 at the 24th Chaos Communication Congress ( events.ccc.de/congress/2007). At this writing a video of the presentation by Karsten Nohl and Henryk Plotz was available online at video.google.com/videoplay?docid=4252367680974396650&hl=en.

Hal Berghel is an educator, administrator, inventor, author, columnist, lecturer and sometimes talk show guest. He is both an ACM and IEEE Fellow and has been recognized by both organizations for distinguished service. He is the Associate Dean of the Howard R. Hughes College of Engineering at UNLV, and his consultancy, Berghel.Net, provides security services for government and industry.